Using Azure AI Foundry hosted models in Cline or Roo Code

Why

Refer to my last post.

Steps

Note: The screenshots are all from Cline. Since Roo Code is a fork of Cline, its UI and steps are very similar.

There are two ways to do this:

Use models integrated with GitHub Copilot

1) Carry out the steps described in my last post to integrate AI Foundry models with GitHub Copilot.

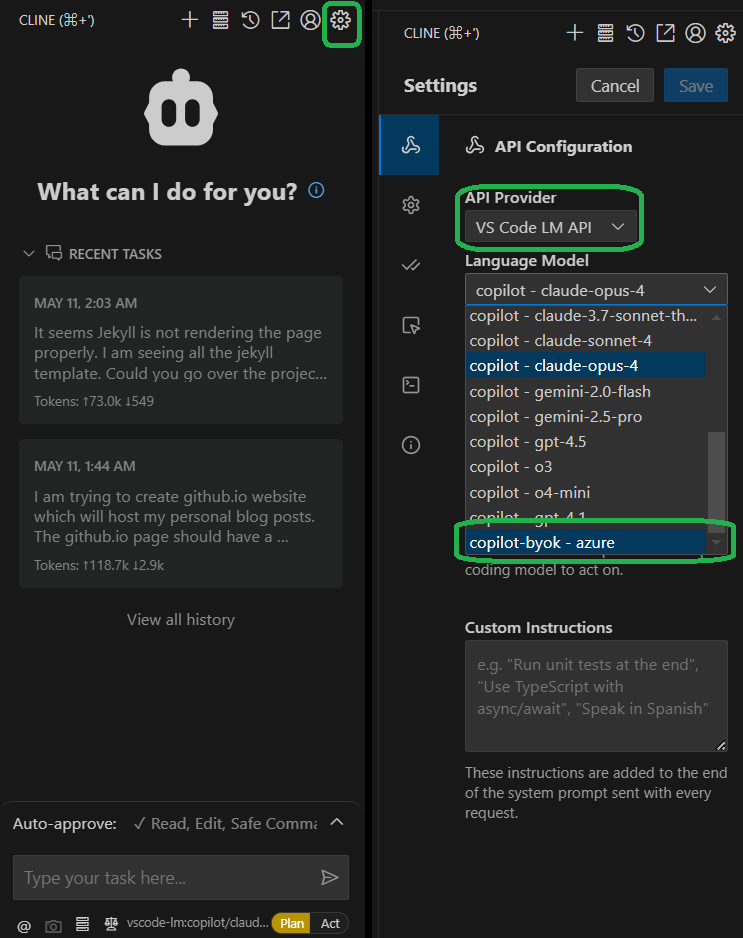

2) In Cline, click settings and select VS Code LM API from the dropdown.

Add the models directly to Cline

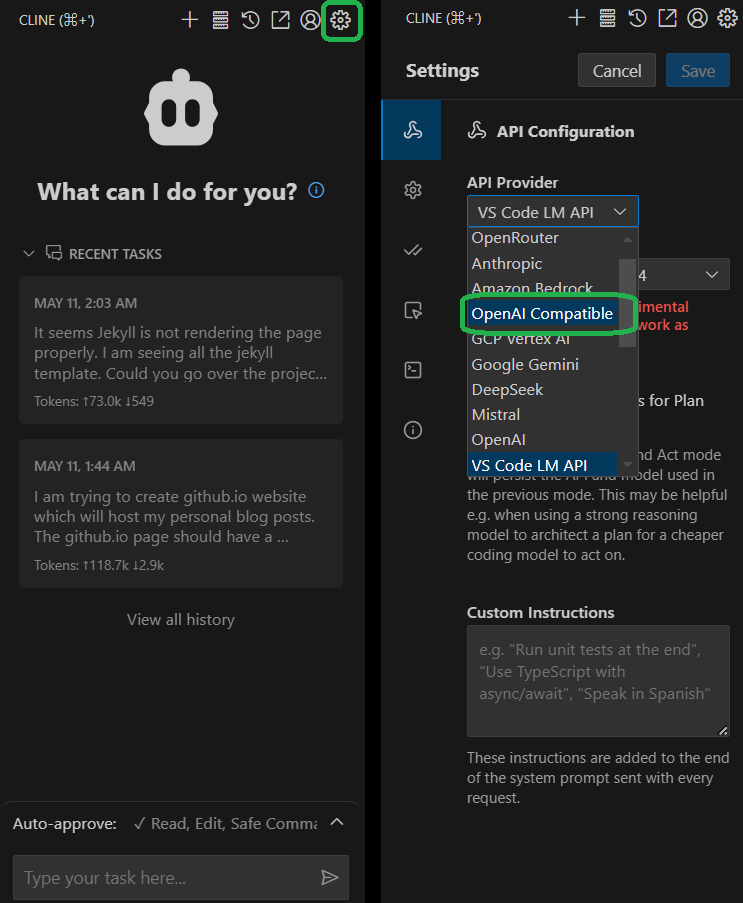

1) Click settings in Cline, and select OpenAI Compatible from the dropdown.

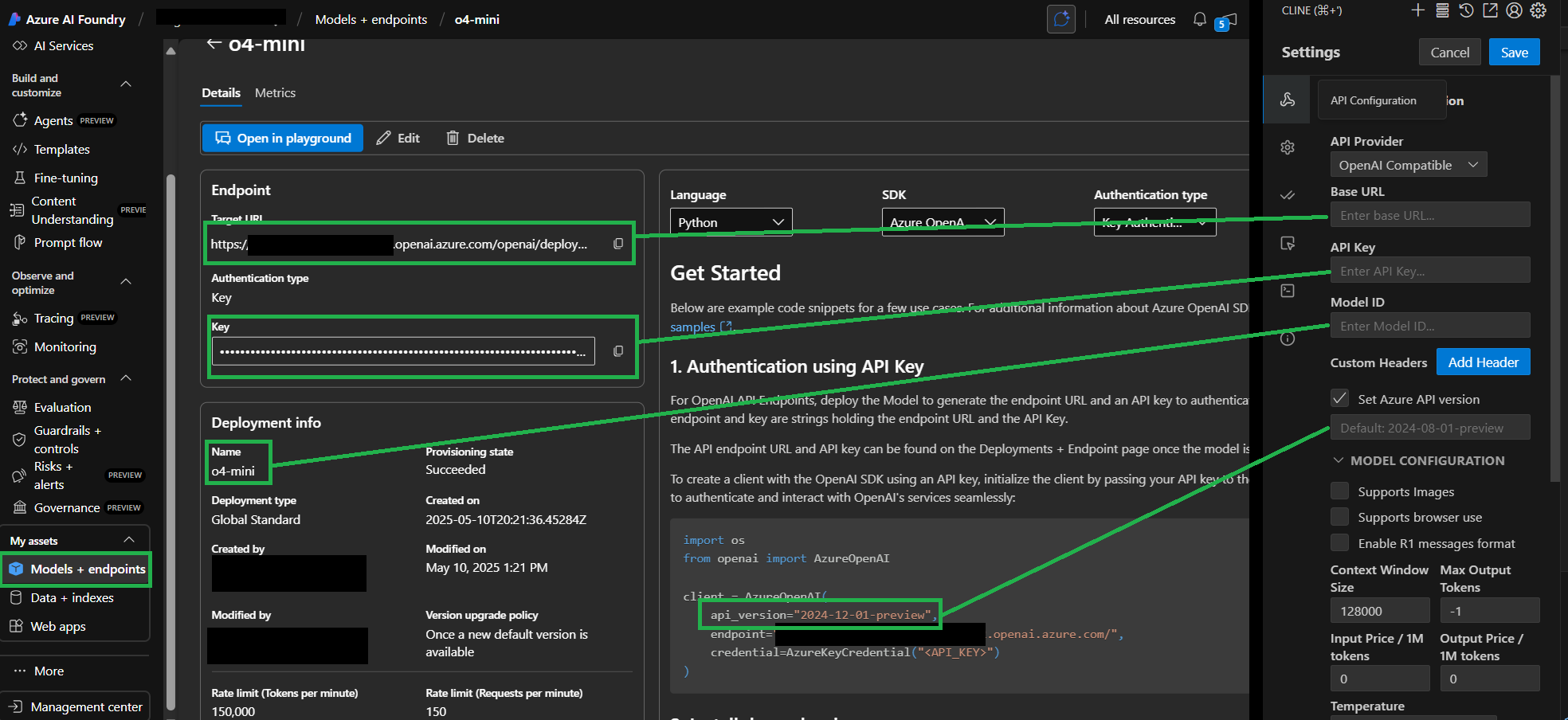

2) Go to your AI Foundry deployment and use its details to fill in the required fields in Cline.

In both Roo and Cline, the Target URI copied from AI Foundry cannot be pasted into the Base URL field as-is. Trim it so that it ends at the deployment name and follows this format:

https://<RESOURCE NAME>.openai.azure.com/openai/deployments/<DEPLOYMENT NAME>

For example, for my deployment, the Target URI shown in AI Foundry and the Base URL I derived from it were as follows.

Target URI: https://<RESOURCE NAME>.openai.azure.com/openai/deployments/o4-mini/chat/completions?api-version=2025-01-01-preview

Base URL: https://<RESOURCE NAME>.openai.azure.com/openai/deployments/o4-mini
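If you do this for several deployments, the trimming can be scripted. The following is a minimal sketch, not part of Cline or Roo: the function name `base_url_from_target_uri` and the resource name `my-resource` are made up for illustration, and it assumes the Target URI has the `/openai/deployments/<DEPLOYMENT NAME>/...` shape shown above.

```python
from urllib.parse import urlsplit, urlunsplit


def base_url_from_target_uri(target_uri: str) -> str:
    """Trim an AI Foundry Target URI down to the Base URL format above.

    Hypothetical helper: keeps everything up to and including the
    deployment name, and drops the trailing path and the query string.
    """
    parts = urlsplit(target_uri)
    segments = parts.path.split("/")

    # The deployment name is the segment right after "deployments".
    if "deployments" not in segments:
        raise ValueError("Target URI has no /deployments/ segment")
    idx = segments.index("deployments")
    if idx + 1 >= len(segments) or not segments[idx + 1]:
        raise ValueError("Target URI has no deployment name")

    # Keep "/openai/deployments/<DEPLOYMENT NAME>" and discard the rest
    # (e.g. "/chat/completions" and "?api-version=...").
    path = "/".join(segments[: idx + 2])
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


if __name__ == "__main__":
    # "my-resource" is a placeholder resource name, not a real endpoint.
    target = (
        "https://my-resource.openai.azure.com/openai/deployments/o4-mini"
        "/chat/completions?api-version=2025-01-01-preview"
    )
    print(base_url_from_target_uri(target))
    # prints: https://my-resource.openai.azure.com/openai/deployments/o4-mini
```

The helper raises a `ValueError` instead of guessing when the URI does not contain a deployment segment, so a Target URI in a different format will not silently produce a wrong Base URL.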

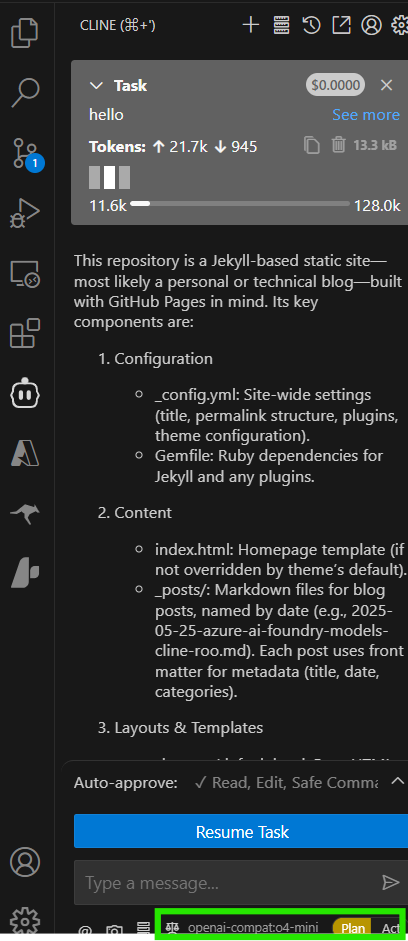

After completion:

Note: These features are still a work in progress, and newer models may not be fully supported yet.