using azure ai foundry hosted models for github copilot

why bother

Why would we want to use Azure AI Foundry-hosted models as the base model for GitHub Copilot? There are a few good reasons.

Sometimes we hit the dreaded quota limit, which seems to apply even to enterprise GitHub Copilot subscriptions. Or maybe we are resource-constrained and don't want to pay for both Azure AI Foundry models and GitHub Copilot seats.

Another case: we might be working on a sensitive codebase and not want to share our code with whichever server Copilot uses for its default base models. This is pretty common in large organizations. Since Azure AI Foundry models keep data and prompts within our own tenant, they make a natural choice for the backing LLM.

steps

Getting this to work took some experimenting, and I did not find any official documentation for it.

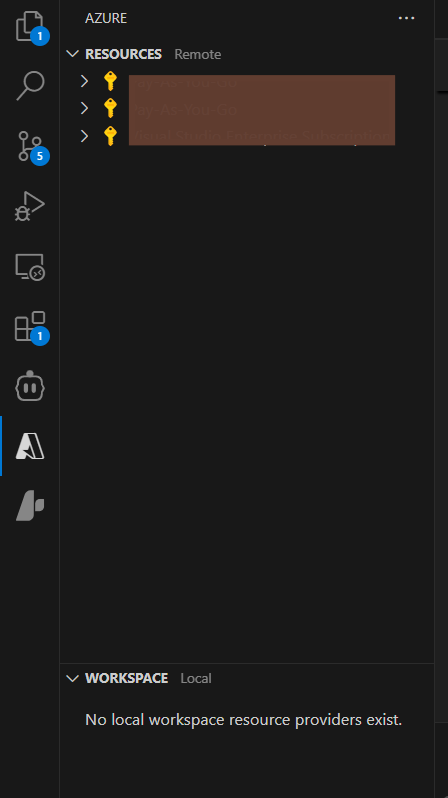

1) Install the Azure extension in VS Code, sign in, and make sure your subscriptions are listed.

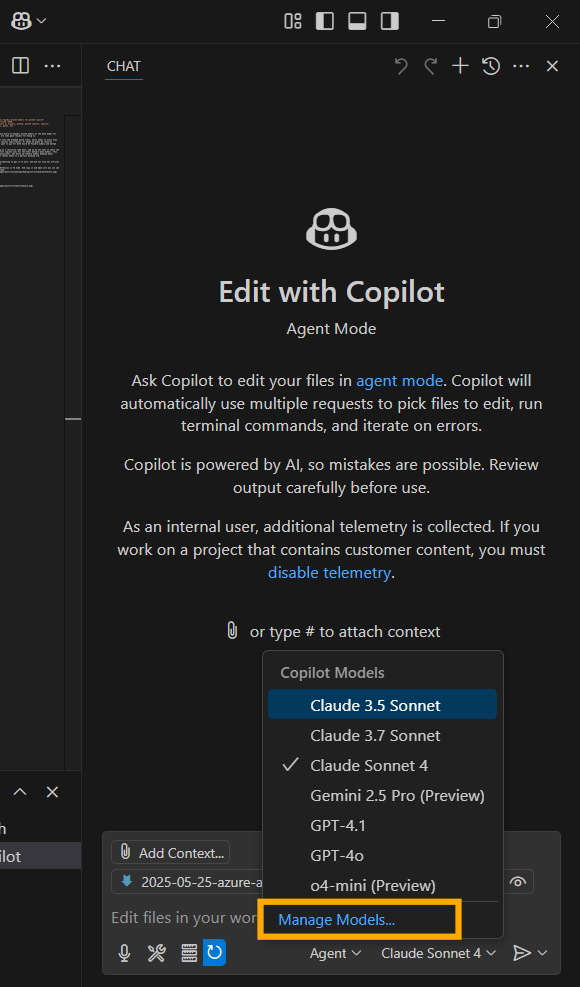

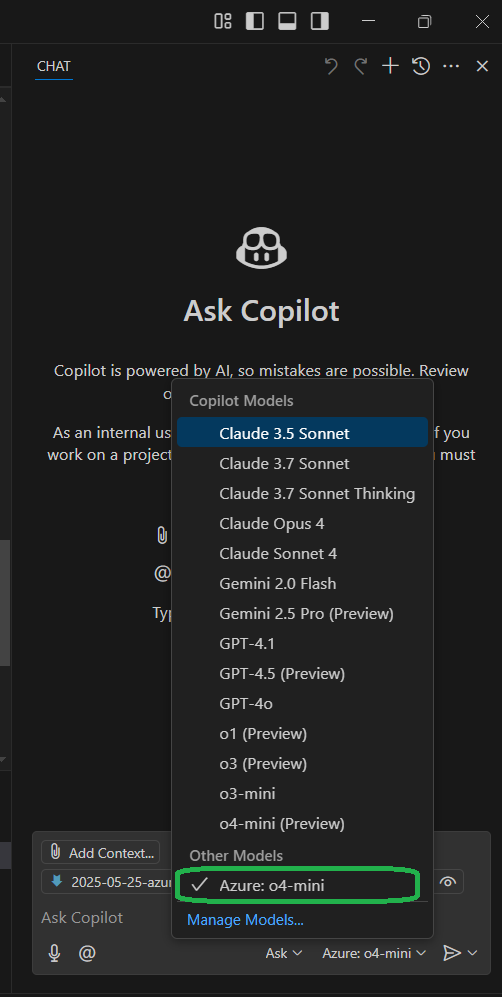

2) Open GitHub Copilot Chat (Ctrl + Alt + I) and click "Manage Models" in the model selector dropdown.

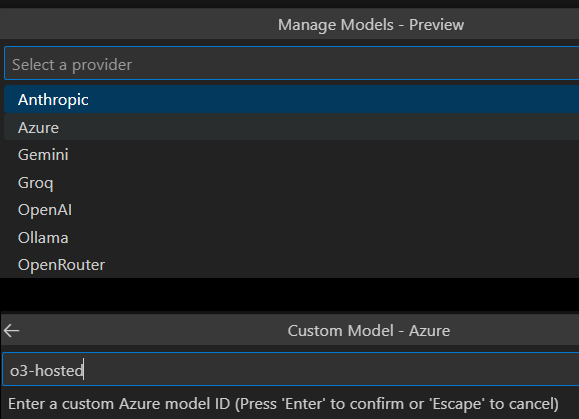

3) Select Azure and enter a model ID that will be used to identify the model.

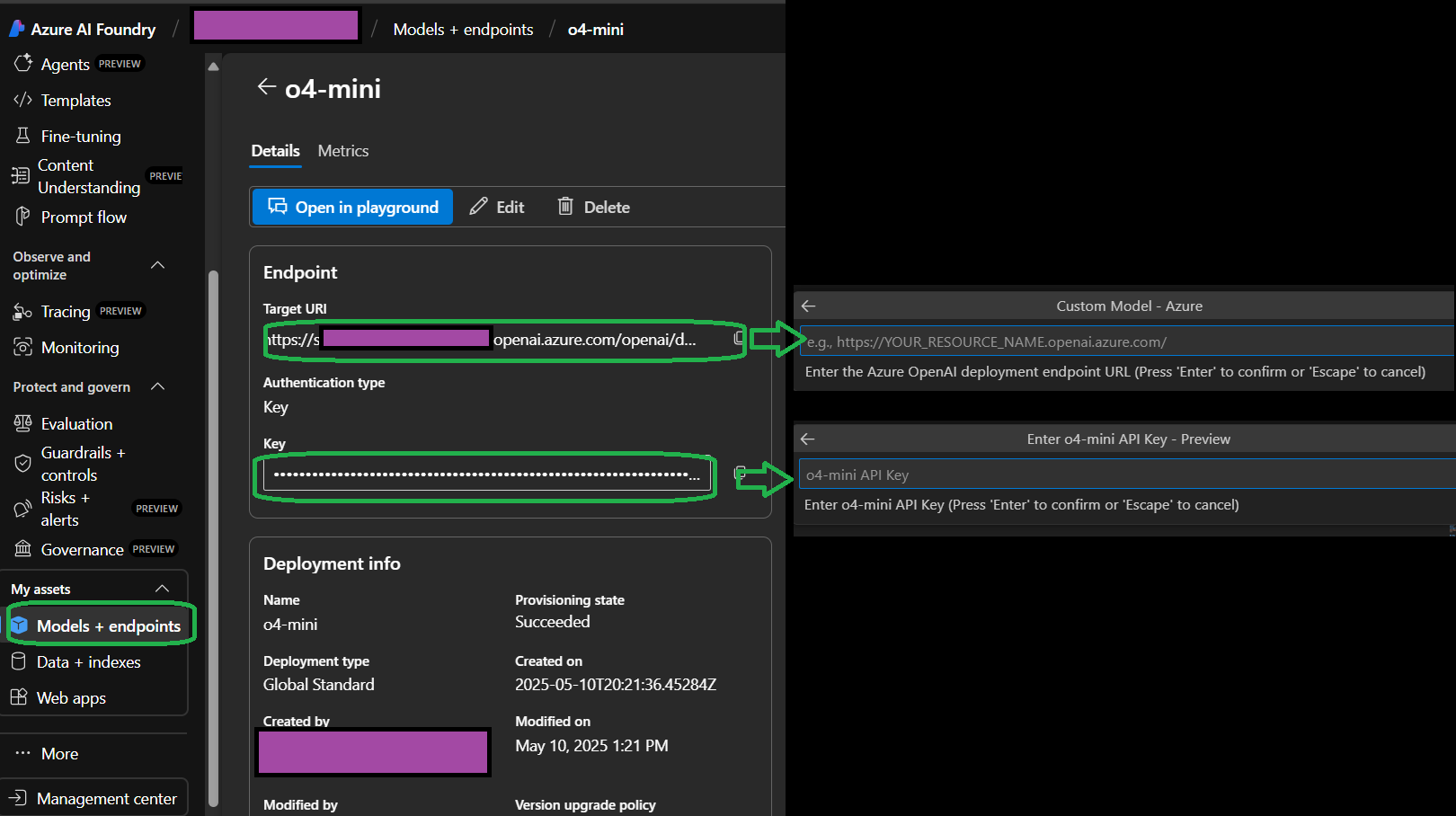

4) Copy the model's target URI and API key from AI Foundry and paste them in.

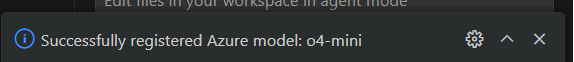
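Before (or after) pasting the values in, it can be worth confirming that the target URI and key actually work outside of Copilot. The Python sketch below assumes the target URI copied from AI Foundry is a full Azure OpenAI-style chat completions URL (including its `api-version` query string) and that the endpoint accepts the key in an `api-key` header, as Azure OpenAI deployments do. The environment variable names `FOUNDRY_TARGET_URI` and `FOUNDRY_API_KEY` and the helper `build_chat_request` are hypothetical, chosen only for this example.

```python
import json
import os
import urllib.error
import urllib.request


def build_chat_request(target_uri: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completions request.

    Assumption: target_uri is the full chat completions URL copied from
    AI Foundry, and the endpoint reads the key from an `api-key` header.
    """
    body = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        target_uri,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def main() -> None:
    # Hypothetical variable names; set them to the values copied from AI Foundry.
    target_uri = os.environ.get("FOUNDRY_TARGET_URI")
    api_key = os.environ.get("FOUNDRY_API_KEY")
    if not target_uri or not api_key:
        print("Set FOUNDRY_TARGET_URI and FOUNDRY_API_KEY to run the check.")
        return

    req = build_chat_request(target_uri, api_key, "Reply with the word ok.")
    try:
        with urllib.request.urlopen(req, timeout=30) as resp:
            reply = json.load(resp)
    except urllib.error.HTTPError as err:
        # Surface the HTTP status so a bad key or URI is easy to spot.
        print(f"Request failed with HTTP {err.code}")
        return

    # Print the model's reply from the first choice.
    print(reply["choices"][0]["message"]["content"])


if __name__ == "__main__":
    main()
```

If the script prints a short reply, the same URI and key should be usable in step 4; an HTTP 401 usually points at the key, and a 404 at the URI or deployment name.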

After these steps, the model is ready to use. A pop-up usually appears indicating success or failure.

The model can then be selected from the model selector dropdown.

And now, we can code away!

Note: These features are still a work in progress, and newer models may not be fully supported yet.